Publications

2025

- Clustering is back: Reaching state-of-the-art LiDAR instance segmentation without training. Under review, 2025. 1st place in the SemanticKITTI panoptic segmentation benchmark.

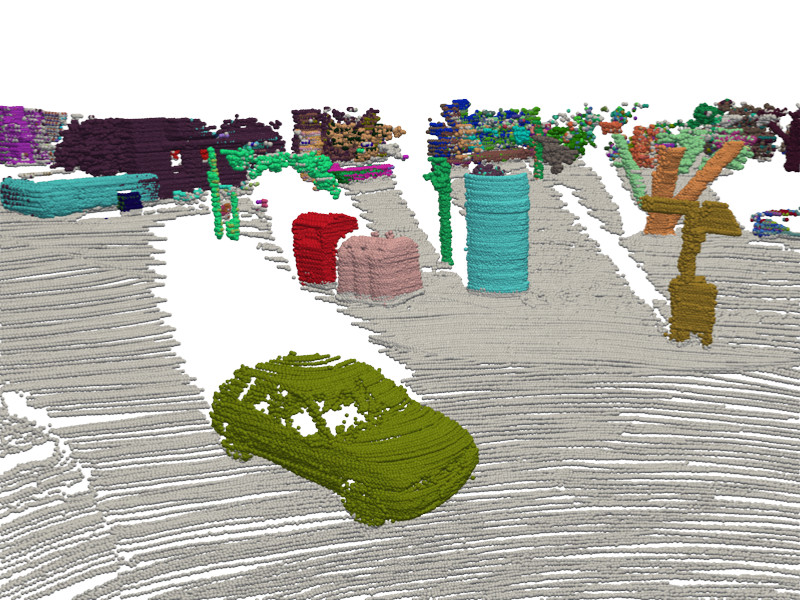

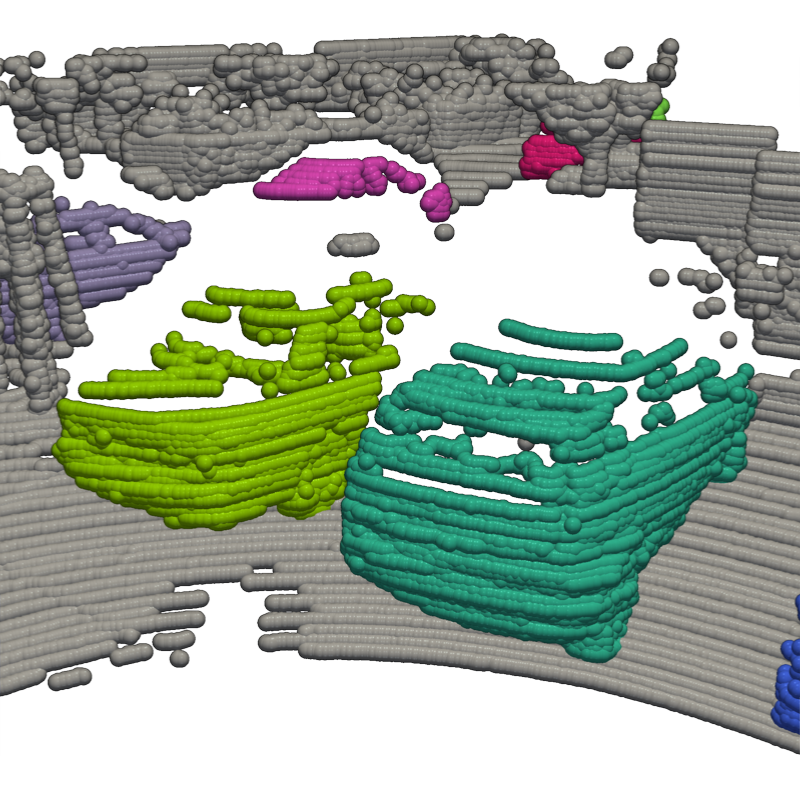

Panoptic segmentation of LiDAR point clouds is fundamental to outdoor scene understanding, with autonomous driving being a primary application. While state-of-the-art approaches typically rely on end-to-end deep learning architectures and extensive manual annotations of instances, the significant cost and time investment required for labeling large-scale point cloud datasets remains a major bottleneck in this field. In this work, we demonstrate that competitive panoptic segmentation can be achieved using only semantic labels, with instances predicted without any training or annotations. Our method, Alpine, outperforms state-of-the-art supervised methods on standard benchmarks including SemanticKITTI and nuScenes, and outperforms every publicly available method on SemanticKITTI as a drop-in instance head replacement, while running in real time on a single-threaded CPU and requiring no instance labels. It is fully explainable, and requires no learning or parameter tuning. Alpine combined with state-of-the-art semantic segmentation ranks first on the official panoptic segmentation leaderboard of SemanticKITTI.

@article{sautier2025Alpine, title = {Clustering is back: Reaching state-of-the-art LiDAR instance segmentation without training}, author = {Sautier, Corentin and Puy, Gilles and Boulch, Alexandre and Marlet, Renaud and Lepetit, Vincent}, year = {2025}, journal = {Under review}, }

- UNIT: unsupervised Online Instance Segmentation through Time. In International Conference on 3D Vision, 2025.

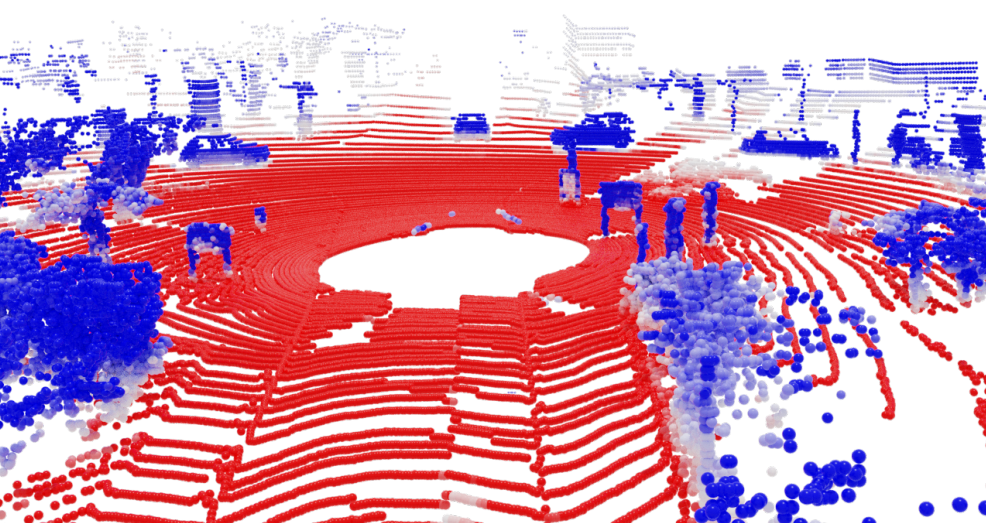

Online object segmentation and tracking in Lidar point clouds enables autonomous agents to understand their surroundings and make safe decisions. Unfortunately, manual annotations for these tasks are prohibitively costly. We tackle this problem with the task of class-agnostic unsupervised online instance segmentation and tracking. To that end, we leverage an instance segmentation backbone and propose a new training recipe that enables the online tracking of objects. Our network is trained on pseudo-labels, eliminating the need for manual annotations. We conduct an evaluation using metrics adapted for temporal instance segmentation. Computing these metrics requires temporally-consistent instance labels. When unavailable, we construct these labels using the available 3D bounding boxes and semantic labels in the dataset. We compare our method against strong baselines and demonstrate its superiority across two different outdoor Lidar datasets.

@inproceedings{sautier2025UNIT, title = {UNIT: unsupervised Online Instance Segmentation through Time}, author = {Sautier, Corentin and Puy, Gilles and Boulch, Alexandre and Marlet, Renaud and Lepetit, Vincent}, year = {2025}, booktitle = {International Conference on 3D Vision}, url = {https://CSautier.github.io/unit}, }

2024

- Three Pillars improving Vision Foundation Model Distillation for Lidar. Gilles Puy, Spyros Gidaris, Alexandre Boulch, Oriane Siméoni, Corentin Sautier, Patrick Pérez, Andrei Bursuc, and Renaud Marlet. In Conference on Computer Vision and Pattern Recognition, 2024.

Self-supervised image backbones can be used to address complex 2D tasks (e.g., semantic segmentation, object discovery) very efficiently and with little or no downstream supervision. Ideally, 3D backbones for lidar should be able to inherit these properties after distillation of these powerful 2D features. The most recent methods for image-to-lidar distillation on autonomous driving data show promising results, obtained thanks to distillation methods that keep improving. Yet, we still notice a large performance gap when measuring the quality of distilled and fully supervised features by linear probing. In this work, instead of focusing only on the distillation method, we study the effect of three pillars for distillation: the 3D backbone, the pretrained 2D backbones, and the pretraining dataset. In particular, thanks to our scalable distillation method named ScaLR, we show that scaling the 2D and 3D backbones and pretraining on diverse datasets leads to a substantial improvement of the feature quality. This allows us to significantly reduce the gap between the quality of distilled and fully-supervised 3D features, and to improve the robustness of the pretrained backbones to domain gaps and perturbations.

@inproceedings{puy2024revisiting, title = {Three Pillars improving Vision Foundation Model Distillation for Lidar}, author = {Puy, Gilles and Gidaris, Spyros and Boulch, Alexandre and Siméoni, Oriane and Sautier, Corentin and Pérez, Patrick and Bursuc, Andrei and Marlet, Renaud}, booktitle = {Conference on Computer Vision and Pattern Recognition}, year = {2024}, }

- BEVContrast: Self-Supervision in BEV Space for Automotive Lidar Point Clouds. In International Conference on 3D Vision, 2024.

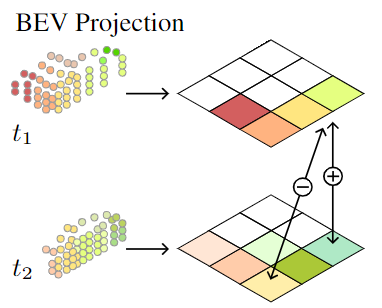

We present a surprisingly simple and efficient method for self-supervision of 3D backbones on automotive Lidar point clouds. We design a contrastive loss between features of Lidar scans captured in the same scene. Several such approaches have been proposed in the literature, from PointContrast, which uses a contrast at the level of points, to the state-of-the-art TARL, which uses a contrast at the level of segments, roughly corresponding to objects. While the former enjoys great simplicity of implementation, it is surpassed by the latter, which however requires costly pre-processing. In BEVContrast, we define our contrast at the level of 2D cells in the Bird’s Eye View plane. The resulting cell-level representations offer a good trade-off between the point-level representations exploited in PointContrast and the segment-level representations exploited in TARL: we retain the simplicity of PointContrast (cell representations are cheap to compute) while surpassing the performance of TARL in downstream semantic segmentation.

@inproceedings{BEVContrast, author = {Sautier, Corentin and Puy, Gilles and Boulch, Alexandre and Marlet, Renaud and Lepetit, Vincent}, title = {{BEVContrast}: Self-Supervision in BEV Space for Automotive Lidar Point Clouds}, booktitle = {International Conference on 3D Vision}, year = {2024}, }
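
The cell-level representation described in the BEVContrast abstract can be illustrated with a minimal sketch. It covers only the pooling step: per-point features are averaged inside each 2D cell of the Bird's Eye View plane. The function name `bev_cell_pool`, the `cell_size` parameter, and the plain-list data layout are assumptions made for illustration, not the authors' implementation. Scan registration and the contrastive loss itself are not shown.

```python
import math


def bev_cell_pool(points, features, cell_size=1.0):
    """Average per-point features inside each 2D Bird's Eye View cell.

    points:   list of (x, y, z) coordinates (z is ignored for the BEV projection)
    features: list of equal-length feature vectors, one per point
    Returns a dict mapping the cell index (ix, iy) to the mean feature vector.
    Hypothetical helper for illustration only.
    """
    sums = {}
    counts = {}
    for (x, y, _z), feat in zip(points, features):
        # Project onto the ground plane and quantise to a grid cell.
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        acc = sums.setdefault(cell, [0.0] * len(feat))
        for i, value in enumerate(feat):
            acc[i] += value
        counts[cell] = counts.get(cell, 0) + 1
    # Divide the accumulated sums by the number of points in each cell.
    return {cell: [v / counts[cell] for v in acc] for cell, acc in sums.items()}


points = [(0.2, 0.3, 1.0), (0.8, 0.9, 0.0), (1.5, 0.1, 0.0)]
features = [[1.0, 0.0], [3.0, 2.0], [5.0, 5.0]]
pooled = bev_cell_pool(points, features, cell_size=1.0)
print(pooled)  # {(0, 0): [2.0, 1.0], (1, 0): [5.0, 5.0]}
```

Under this sketch, two scans expressed in a common frame would produce dictionaries keyed by the same cell indices, so cells present in both scans could serve as positive pairs for a contrastive loss.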

2023

- ALSO: Automotive Lidar Self-supervision by Occupancy estimation. In Conference on Computer Vision and Pattern Recognition, 2023.

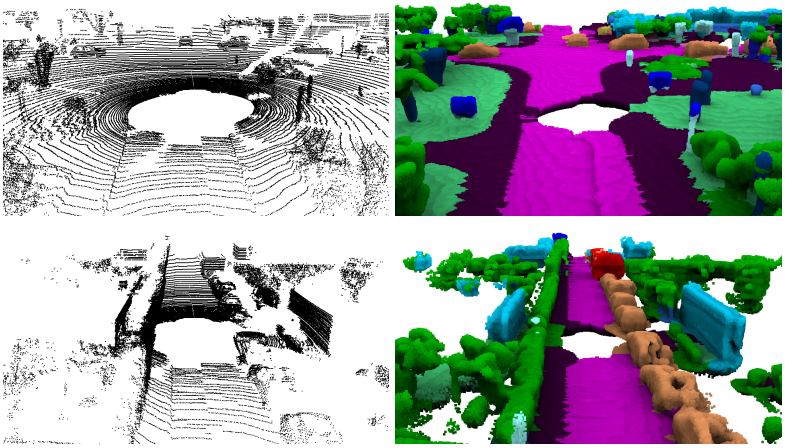

We propose a new self-supervised method for pre-training the backbone of deep perception models operating on point clouds. The core idea is to train the model on a pretext task, namely the reconstruction of the surface on which the 3D points are sampled, and to use the underlying latent vectors as input to the perception head. The intuition is that if the network is able to reconstruct the scene surface, given only sparse input points, then it probably also captures some fragments of semantic information that can be used to boost an actual perception task. This principle has a very simple formulation, which makes it both easy to implement and widely applicable to a large range of 3D sensors and deep networks performing semantic segmentation or object detection. In fact, it supports a single-stream pipeline, as opposed to most contrastive learning approaches, allowing training on limited resources. We conducted extensive experiments on various autonomous driving datasets, involving very different kinds of lidars, for both semantic segmentation and object detection. The results show the effectiveness of our method in learning useful representations without any annotation, compared to existing approaches.

@inproceedings{ALSO, author = {Boulch, Alexandre and Sautier, Corentin and Michele, Björn and Puy, Gilles and Marlet, Renaud}, title = {{ALSO}: Automotive Lidar Self-supervision by Occupancy estimation}, booktitle = {Conference on Computer Vision and Pattern Recognition}, year = {2023}, }

2022

- Image-to-Lidar Self-Supervised Distillation for Autonomous Driving Data. In Conference on Computer Vision and Pattern Recognition, 2022.

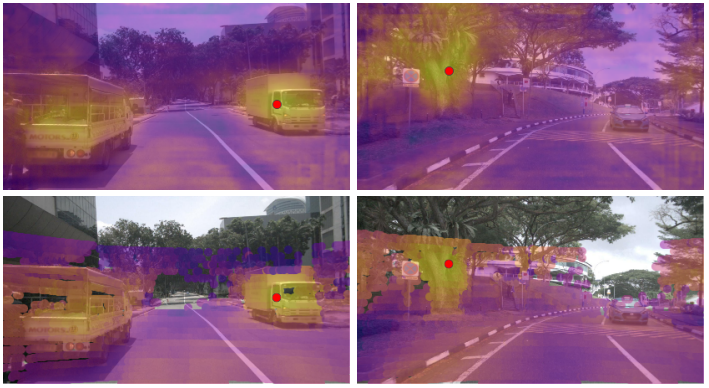

Segmenting or detecting objects in sparse Lidar point clouds are two important tasks in autonomous driving to allow a vehicle to act safely in its 3D environment. The best performing methods in 3D semantic segmentation or object detection rely on a large amount of annotated data. Yet annotating 3D Lidar data for these tasks is tedious and costly. In this context, we propose a self-supervised pre-training method for 3D perception models that is tailored to autonomous driving data. Specifically, we leverage the availability of synchronized and calibrated image and Lidar sensors in autonomous driving setups for distilling self-supervised pre-trained image representations into 3D models. Hence, our method does not require any point cloud or image annotations. The key ingredient of our method is the use of superpixels, which are used to pool 3D point features and 2D pixel features in visually similar regions. We then train a 3D network on the self-supervised task of matching these pooled point features with the corresponding pooled image pixel features. The advantages of contrasting regions obtained by superpixels are that: (1) grouping together pixels and points of visually coherent regions leads to a more meaningful contrastive task that produces features well adapted to 3D semantic segmentation and 3D object detection; (2) all the different regions have the same weight in the contrastive loss regardless of the number of 3D points sampled in these regions; (3) it mitigates the noise produced by incorrect matching of points and pixels due to occlusions between the different sensors. Extensive experiments on autonomous driving datasets demonstrate the ability of our image-to-Lidar distillation strategy to produce 3D representations that transfer well to semantic segmentation and object detection tasks.

@inproceedings{SLidR, author = {Sautier, Corentin and Puy, Gilles and Gidaris, Spyros and Boulch, Alexandre and Bursuc, Andrei and Marlet, Renaud}, title = {Image-to-Lidar Self-Supervised Distillation for Autonomous Driving Data}, booktitle = {Conference on Computer Vision and Pattern Recognition}, year = {2022}, }
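
The superpixel pooling and matching task described in the abstract above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the function names, the InfoNCE-style form of the loss, and the `temperature` value are all hypothetical choices, since the abstract does not specify them. Features are mean-pooled per superpixel, so each region contributes one vector to the loss regardless of how many 3D points fall inside it.

```python
import math


def pool_by_superpixel(features, superpixel_ids):
    """Mean-pool features per superpixel id and L2-normalise each pooled vector."""
    sums, counts = {}, {}
    for feat, sp in zip(features, superpixel_ids):
        acc = sums.setdefault(sp, [0.0] * len(feat))
        for i, value in enumerate(feat):
            acc[i] += value
        counts[sp] = counts.get(sp, 0) + 1
    pooled = {}
    for sp, acc in sums.items():
        mean = [v / counts[sp] for v in acc]
        norm = math.sqrt(sum(v * v for v in mean)) or 1.0  # avoid division by zero
        pooled[sp] = [v / norm for v in mean]
    return pooled


def superpixel_contrastive_loss(point_pooled, pixel_pooled, temperature=0.07):
    """InfoNCE-style loss (an assumption): the pooled point feature of a superpixel
    should be closest to the pooled pixel feature of the same superpixel."""
    ids = sorted(set(point_pooled) & set(pixel_pooled))
    if not ids:
        raise ValueError("no superpixel is shared by the two modalities")
    total = 0.0
    for row, sp in enumerate(ids):
        logits = [
            sum(a * b for a, b in zip(point_pooled[sp], pixel_pooled[other])) / temperature
            for other in ids
        ]
        # Numerically stable log-sum-exp over all candidate superpixels.
        top = max(logits)
        log_sum_exp = top + math.log(sum(math.exp(v - top) for v in logits))
        total += log_sum_exp - logits[row]
    return total / len(ids)


# Toy data: three 3D points in two superpixels, two pixels in the same superpixels.
point_pooled = pool_by_superpixel([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]], [0, 0, 1])
pixel_pooled = pool_by_superpixel([[2.0, 0.0], [0.0, 3.0]], [0, 1])
aligned_loss = superpixel_contrastive_loss(point_pooled, pixel_pooled)

# Swapping the pixel superpixel ids breaks the correspondence and raises the loss.
swapped_pixels = pool_by_superpixel([[2.0, 0.0], [0.0, 3.0]], [1, 0])
swapped_loss = superpixel_contrastive_loss(point_pooled, swapped_pixels)
print(aligned_loss < swapped_loss)
```

In an actual training setup the pooled point features would come from the 3D network being trained and the pooled pixel features from a frozen self-supervised image backbone; here both are hard-coded lists to keep the sketch self-contained.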

2020

- State Prediction in TextWorld with a Predicate-Logic Pointer Network Architecture. Corentin Sautier, Don Joven Agravante, and Michiaki Tatsubori. In Workshop on Knowledge-based Reinforcement Learning at IJCAI 2020, 2020.

Our work builds toward AI agents that can take advantage of data and deep learning while making use of the structure, extensibility and explainability of logical models with automated planning. We believe such agents have the potential to surpass many of the inherent limitations of current RL-based agents. Towards this long-term goal, we aim to improve the capability of deep learning as a front-end to produce the logical state representations required by planners. Specifically, we are interested in text-based games where we use Transformers to translate the unstructured textual description in the game into logical states. To improve the neural network architecture for this problem setting, we propose to augment the Transformer with a pointer network in a two-stage architecture. Our results show a clear improvement over a baseline Transformer network.

@inproceedings{sautier_ijcai2020, title = {State Prediction in TextWorld with a Predicate-Logic Pointer Network Architecture}, author = {Sautier, Corentin and Agravante, Don Joven and Tatsubori, Michiaki}, booktitle = {Workshop on Knowledge-based Reinforcement Learning at IJCAI 2020}, year = {2020}, }